AI and Machine Learning for Predictive Trading—Part 1

Artificial intelligence (AI) and Machine learning (ML) have grown exponentially in the past few years due to improvements in computational capacity and the availability of vast amounts of digital data. At its base level, machine learning classifies and organizes large datasets and then applies algorithms to make predictions. Institutional traders use these predictions to create trading signals.

The power of predictive signals is becoming increasingly important as the latency race matures. Trading signals allow algos to be predictive instead of purely reactive, improving their effective “speed” without lowering latency in the traditional sense.

Many prognosticators expect machine learning will become a determining factor for success in institutional trading—in the same way low-latency became table stakes over time. It is useful to understand how electronic trading has developed to reach its current demand for predictive signals.

The Maturing of Speed and the Acceleration of Prediction

When the markets went electronic, some key players realized they could leverage speed to capture alpha. Thus, the emergence of Electronic Communication Networks (ECNs) in the late ’90s led to the increased use of algorithms for trading and the development of high frequency trading (HFT).

The main strategies employed by HFTs are market and latency arbitrage. Both strategies are driven by speed—being the first (or one of the first) to identify and respond to opportunities to trade against mispriced orders. This created an ever-increasing demand for lower latencies or the “race to zero.”

These speeds—first milliseconds, then microseconds, now nanoseconds—were achieved by optimizing data networks and accelerating trading systems. To reduce the latency of market data delivery firms subscribed to direct market feeds from the exchanges, co-located trading systems in the same data centers as the exchanges, and used wireless network technologies (such as microwaves) to move data between data centers. To minimize reaction time to new market data, traders bought the latest processor technology, optimized software performance, and eventually developed purpose-built hardware to execute trading strategies.

The largest players on the buy-side had the resources to make the infrastructure changes required, while the sell-side protected themselves from latency arbitrage by using execution strategies that were less speed dependent. Despite this, a certain level of speed is expected from any firm on the buy or sell-side to achieve best execution—leading to the commodification of low latency.

Those firms that have already reached their individual potential for low latency need to find that next best thing to give them a competitive edge. The only thing faster than near-zero latency is “negative latency” or predictions.

The current confluence of high-speed tech, the development of AI, and the amount of data readily available has created a perfect storm for predictive analysis in institutional trading. In fact, popular media have dubbed it “the era of data-driven trading.” Machine learning is one of the only ways to sift through that data and identify profitable trades.

Machine Learning, Deep Learning, and Artificial Intelligence

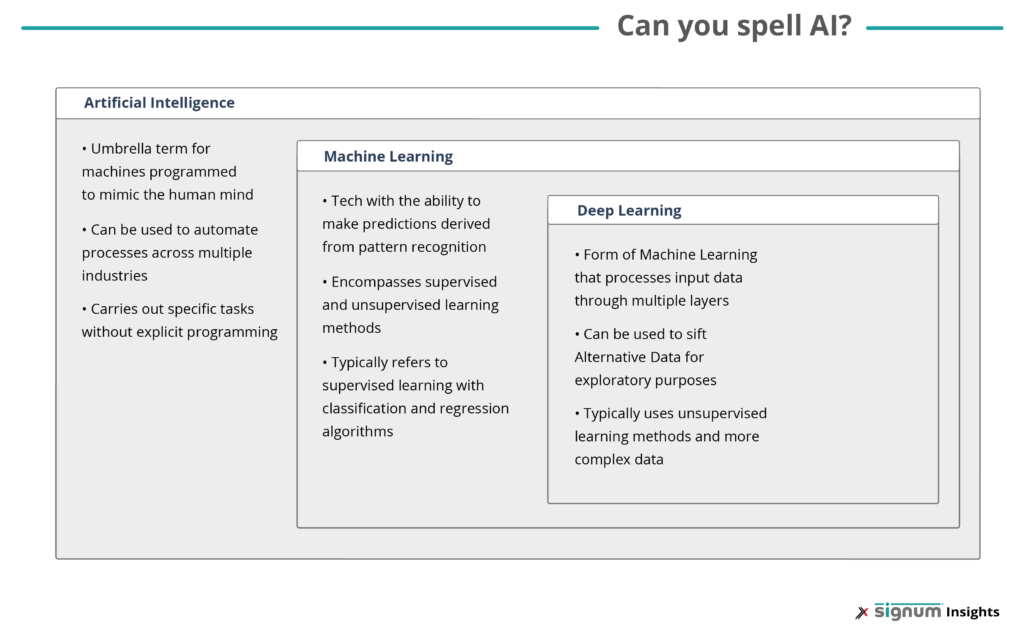

In the 1950s what are now considered statistical algorithms would have been classified as artificial intelligence (AI). However, over the past 70 years, the field has grown in complexity and AI now serves as an umbrella term for machines programmed to replicate the processes of the human mind.

Machine learning is a subset of AI. It is a broad term that describes the technology’s ability to make predictions derived from pattern recognition. Any algorithm that can predict output based solely on its input data—in other words, without a human being explicitly telling the algorithm the function or rule—is considered machine learning.

A step further than that is deep learning. Deep learning is a subset of machine learning that performs a multi-layered analysis and is capable of navigating press releases and social media feeds for human sentiment.

While technically deep learning falls under machine learning, the term “machine learning” is popularly used to describe anything that is not complex enough to be considered deep learning.

The main difference between machine and deep learning is that deep learning is much better at sifting through irregular or alternative data. This works well because training a deep learning algorithm requires vastly more data and today there is a large pool of available alternative data.

Deep learning is able to recognize patterns that are too complex for the human brain to map out. It can prioritize variables in a problem by level of importance, hence, it is referred to as “deep” because it conveys a more layered approach.

Machine learning does perform a similar task, but it can operate on much less data and does better with normalized or regular data sets. Market data, credit information, balance sheets, and most other financial data can be classified and recognized by machine learning.

Supervised and Unsupervised Learning for AI Technology

There are two broadly discussed ways of training AI algorithms: supervised and unsupervised learning. The levels of machine learning not complex enough to be considered deep learning go through a “supervised learning” method, while deep learning can be put through an “unsupervised learning” method.

However, like the taxonomy between machine learning and deep learning, it is not that simple. Forbes highlights how most problems in the world of financial algorithms require a mix of the two, creating “semi-supervised” learning.

Supervised Learning and the Bias-Variance Trade-off

Supervised learning commonly uses normalized data to find patterns based on input that data scientists already know is related to a specific target or prediction to be made. The function relating the two can be known or unknown before supervised learning begins, but the target is always known during training. This allows computer scientists to guide or supervise the learning process to stay in track with the target.

Supervised learning is most often used with classification and regression algorithms such as logistic regression, Naïve Bayes classification, support vector machines, and random forests.

These algorithms have inherent risk factors like errors related to the bias-variance trade-off. Bias represents how accurately a machine learning method can capture the relationship between variables in a specific dataset. Variance describes whether the method will or will not fit other datasets.

The trade-off is that bias and variance are negatively correlated, making it hard for some machine learning methods to find the optimal balance of variance and bias. The goal is to achieve low bias and low variance meaning the algorithm will produce accurate predictions across a wide range of datasets. The paradox of negative correlation is that the more specialized the algorithm is, the less well it generalizes.

For example, an algorithm trained on the price movements of APPL would likely be able to predict APPL’s further movements very well (low bias) but would not perform at the same level of accuracy when applied to AMZN (high variance). This can be a desired effect for some algorithms with specialized goals, but it increases the effort required by firms employing predictive analytics across many securities.

Unsupervised Learning for Exploration

Unsupervised learning occurs when data scientists give the computer unlabeled input data for either clustering or representation learning. The method can discover patterns that were not previously known. It is useful for developing new signals or more narrowly finding new “features” that can be used to improve the performance of an unsupervised learning model.

The inherent risks associated with unsupervised learning are the challenges of testing ground-breaking material. The opacity of unsupervised models precludes their usage by regulated brokers. These firms must comply with disclosure of order routing regulations that require them to precisely understand and describe how they make decisions handling customer orders.

Therefore, semi-supervised learning often creates a middle ground between going in blind and playing it safe.

More to Think About for Part 2

Navigating the hype-heavy AI landscape and understanding how ML technology can be applied to specific trading strategies can be confusing.

The second part of this series will take this knowledge to the next level by detailing how others are already implementing AI-powered predictive signals for institutional trading—how Exegy developed its suite of Signals-as-a-Service—and what considerations must be weighed regarding infrastructure and strategy.

For more information on AI-powered trading signals, low-latency infrastructure, or individual expert advice about your firm’s potential use of Exegy’s Signum, contact us.